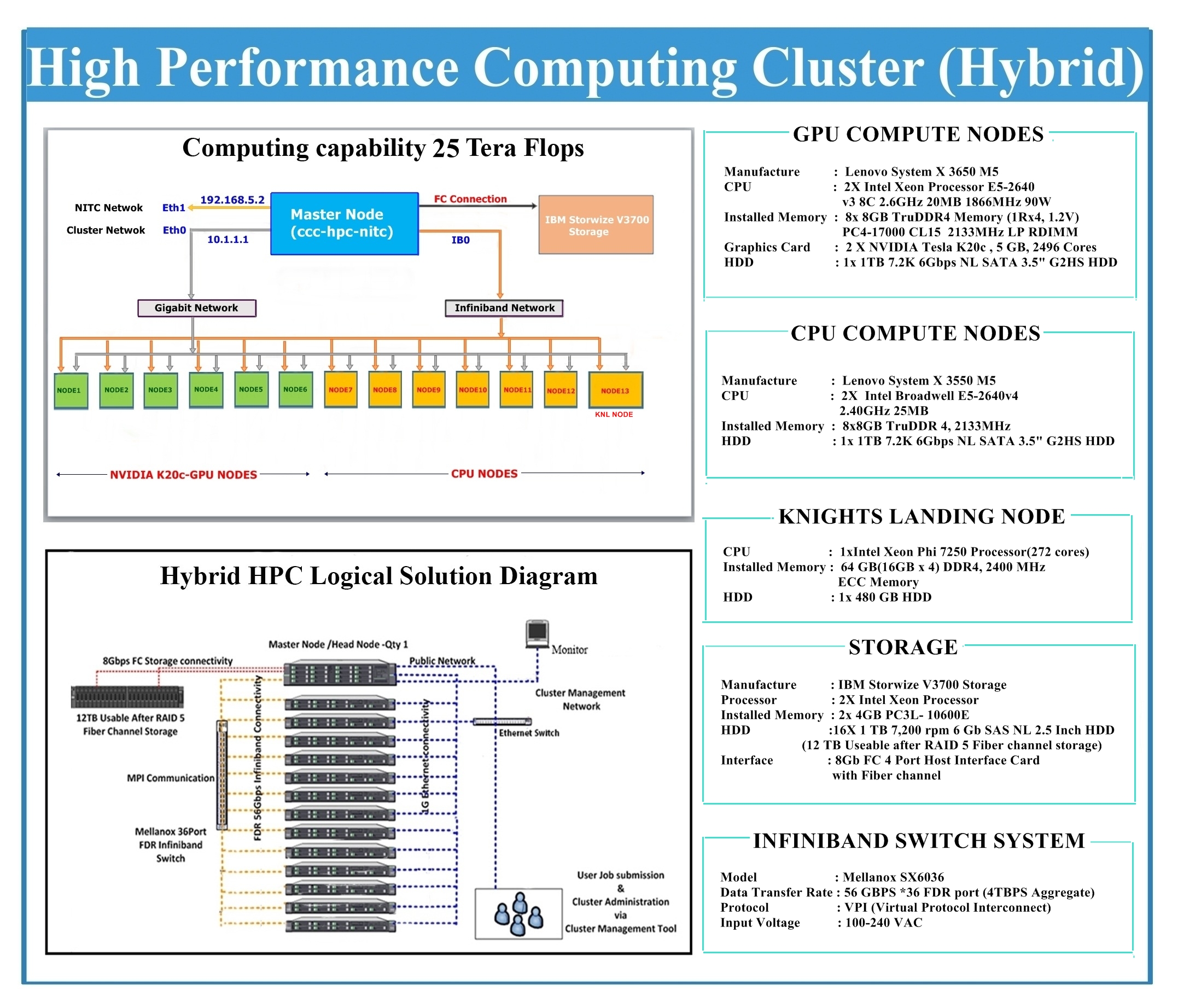

An HPC cluster is a group of computing nodes that combine their computational power to provide more capacity than any single machine. Cluster computing thus uses multiple machines to provide a more powerful computing environment, possibly through a single operating system. In its simplest form, an HPC cluster uses parallel computing to apply more processing power to a problem. Although the cluster may look like a single system from the outside, the internal workings that make this possible can be quite complex. The idea is that the individual tasks that make up a parallel application should run equally well on whichever node they are dispatched to. In practice, however, the nodes in a cluster often differ physically and logically. The High-Performance Computing cluster at the Central Computer Centre, shown in the figure, has a 14-node architecture: one master node, 6 GPU compute nodes, 6 compute nodes, and 1 Xeon Phi (KNL) node. These node types are described below, and their detailed specifications are given in the tables.
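The idea of splitting a problem into independent tasks can be illustrated with a minimal Python sketch. It does not show how jobs are submitted on this cluster: the names `split_range`, `partial_sum`, and `parallel_sum` are hypothetical, and a local thread pool merely stands in for the cluster's nodes. Threads in CPython do not speed up this CPU-bound work, so the sketch only shows how tasks are split, dispatched, and combined.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n, parts):
    """Split the integers 0..n-1 into `parts` contiguous (start, stop) chunks."""
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + base + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum(chunk):
    """One independent task: sum of squares over a single chunk."""
    start, stop = chunk
    return sum(i * i for i in range(start, stop))


def parallel_sum(n, workers=4):
    """Dispatch one task per chunk to the pool and combine the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, split_range(n, workers)))
```

Each task depends only on its own chunk, so it produces the same result on whichever worker it happens to run.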

An HPC cluster is a complex environment, and managing each individual component is essential. The administration node provides many functions, including monitoring the status of individual nodes and issuing management commands to individual nodes, either to correct problems or to perform management functions such as power on/off. The importance of cluster management should not be underestimated. It is imperative when coordinating the activities of a large number of systems.
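The monitoring role of the administration node can be pictured with a small Python sketch. Everything in it is hypothetical: the node names, the status strings, the policy, and the `plan_actions` helper are made up for illustration, and no real management interface is called.

```python
def plan_actions(node_status):
    """Map each node's reported status to an administrative action.

    node_status: dict of node name -> "up", "down", or "unresponsive".
    Returns a sorted list of (node, action) pairs for nodes needing attention.
    """
    # Hypothetical policy: power on nodes that are down, power-cycle hung nodes.
    policy = {"down": "power on", "unresponsive": "power cycle"}
    actions = []
    for node, status in node_status.items():
        if status in policy:
            actions.append((node, policy[status]))
    return sorted(actions)
```

Nodes reported as "up" produce no action, so the returned list contains only the nodes an administrator would need to look at.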

A compute node is where all the computing is performed. Most of the nodes in a cluster are ordinarily compute nodes. To provide a general-purpose solution, a compute node can execute one or more tasks, depending on the scheduling system.
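How a scheduling system might place tasks on compute nodes can be sketched as follows. This is an illustrative least-loaded policy written in Python, not the scheduler used on this cluster. The node names, the task costs, and the `assign_tasks` helper are assumptions made for the example.

```python
import heapq


def assign_tasks(task_costs, node_names):
    """Assign each task to the node with the smallest accumulated load.

    task_costs: dict mapping task name -> estimated cost (a positive number).
    node_names: list of node names.
    Returns a dict mapping node name -> list of task names.
    """
    # Heap entries are (current load, node name); the least-loaded node is on top.
    heap = [(0, name) for name in node_names]
    heapq.heapify(heap)
    placement = {name: [] for name in node_names}

    # Placing the most expensive tasks first tends to balance the load better.
    for task, cost in sorted(task_costs.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)
        placement[name].append(task)
        heapq.heappush(heap, (load + cost, name))
    return placement
```

With this policy a single node can end up holding several tasks, which matches the point above that a compute node may execute one or more tasks.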

A GPU node is a compute node with additional GPU cards as accelerators. Data-parallel jobs offloaded to this node are sent to these accelerators for speedup.

A KNL node is a compute node with an additional Xeon Phi processor as an accelerator. Parallel jobs offloaded to this node are sent to the Xeon Phi for faster execution.

Applications that run on a cluster's compute nodes must have fast, reliable, and concurrent access to a storage system. Storage devices are either directly attached to the nodes or connected to a centralized storage node that is responsible for serving storage requests.

The HPCC at the Central Computer Centre is designed with 13 compute nodes, one of which is a Knights Landing node. Details are as follows:

| Component | Specification |
| --- | --- |
| Model | Lenovo System X 3650 M5 |
| Form factor | 2U rack-mountable server |
| Processor | 2x Intel Xeon Processor (E5-2640 v3, 8C, 2.6GHz, 20MB, 1866MHz, 90W) |
| Memory | 8x 8GB TruDDR4 Memory (1Rx4, 1.2V) PC4-17000 CL15 2133MHz LP RDIMM |
| GPU | 1x NVIDIA K20c, 5GB, 2496 cores |
| Hard disk | 8x 1TB 7.2K 6Gbps NL SATA 3.5" G2HS HDD |
| Operating system | 64-bit CentOS 6.7 |
| Ethernet | Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet PCIe |
| Interconnect | Mellanox Technologies MT27520 Family (FDR) |
| Host bus adapter | QLogic 8Gb FC dual-port HBA with Fibre Channel |
| Remote management | Lenovo Integrated Management Module (IMM) |

| Component | Specification |
| --- | --- |
| Model | Lenovo System X 3650 M5 (6 units) |
| Form factor | 2U rack-mountable server |
| Processor | 2x Intel Xeon Processor (E5-2640 v3, 8C, 2.6GHz, 20MB, 1866MHz, 90W) |
| Memory | 8x 8GB TruDDR4 Memory (1Rx4, 1.2V) PC4-17000 CL15 2133MHz LP RDIMM |
| GPU | 2x NVIDIA K20c, 5GB, 2496 cores |
| Hard disk | 1x 1TB 7.2K 6Gbps NL SATA 3.5" G2HS HDD |
| Operating system | 64-bit CentOS 6.7 |
| Ethernet | Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet PCIe |
| Interconnect | Mellanox Technologies MT27520 Family (FDR) |
| Remote management | Lenovo Integrated Management Module (IMM) |

| Component | Specification |
| --- | --- |
| Model | Lenovo System X 3650 M5 (6 units) |
| Form factor | 2U rack-mountable server |
| Processor | 2x Intel Xeon Processor (E5-2640 v3, 8C, 2.6GHz, 20MB, 1866MHz, 90W) |
| Memory | 8x 8GB TruDDR4 Memory (1Rx4, 1.2V) PC4-17000 CL15 2133MHz LP RDIMM |
| Hard disk | 1x 1TB 7.2K 6Gbps NL SATA 3.5" G2HS HDD |
| Operating system | 64-bit CentOS 6.7 |
| Ethernet | Broadcom Corporation NetXtreme BCM5719 Gigabit Ethernet PCIe |
| Interconnect | Mellanox Technologies MT27520 Family (FDR) |
| Remote management | Lenovo Integrated Management Module (IMM) |

| Component | Specification |
| --- | --- |
| Model | Lenovo System X 3550 M5 |
| Processor | Dual 64-bit processors from the x86 family, Intel Broadwell E5-2640 v4 |
| Memory | 8x 8GB TruDDR4, 2133MHz, scalable up to 1.5TB |
| Cache | L3 cache, 25MB |
| Interconnect | FDR port with 3 m copper cable |
| Hard disk | 500GB SATA disk, scalable up to 8 disks |
| Remote management | Remote management with IPMI 2.0 |
| Security | TPM 1.2 |
| Power supply | Redundant and hot-swap, energy-efficient (90%) power supply |
| | Redundant and hot-swap |
| Failure analysis | Advanced failure analysis support for CPU, memory, HDD, power supply, and fans |

| Component | Specification |
| --- | --- |
| Processor | 1x Intel Xeon Phi 7250 processor (68 cores, 272 threads) |
| Memory | 64 GB (16GB x 4) DDR4, 2400 MHz ECC memory |
| Ethernet | Two 1GbE network ports with PXE boot capability |
| Interconnect | Single-port FDR with cable |
| | 8x 8GB TruDDR4 Memory (1Rx4, 1.2V) PC4-17000 CL15 2133MHz LP RDIMM |
| Storage | 480 GB |
| Remote management | Remote management with IPMI 2.0 |
| Operating system support | Fully certified/compatible with latest RHEL 7.x |
| Power supply | 80 Plus Platinum or better certified power supply with IEC 14 type power cables |
| Form factor | Half-width 1U or equivalent (e.g. 4 servers in 2U), rack-mountable |

| Component | Specification |
| --- | --- |
| Model | IBM Storwize V3700 Storage |
| Processor | 2x Intel Xeon Processor |
| Memory | 2x 4GB PC3L-10600E |
| Hard disk | 16x 1TB 7,200 rpm 6Gb SAS NL 2.5-inch HDD (12 TB usable after RAID 5, Fibre Channel storage) |
| Host interface | 8Gb FC 4-port host interface card with FC |

| Component | Specification |
| --- | --- |
| Model | Lenovo RackSwitch G7028 |
| Performance | 128 Gbps switching throughput (full duplex), latency of 3.3 microseconds, 96 Mpps |
| Ports | 24 × 1 GbE (24 RJ-45), 4 × 10 GbE SFP+ |

Central Computer Centre, National Institute of Technology, Calicut 673601

maincc@nitc.ac.in

0495 228 6852, 0495 228 6854